When to Fix & When to Find Out – Friday Philosophy July 15, 2011

Posted by mwidlake in AWR, Friday Philosophy, Testing.Tags: knowledge, Testing

trackback

{warning, this is one of my long, rambling Friday Philosophy blogs. Technical blogs are on the way – though there are some nice AWR screen shots in this one 🙂 }

As a DBA (or System Administrator or Network admin or any other role that involves fixing things that are broken before the shouting starts) there is often a tension between two contending “best practices”:

– getting the system working again as soon as possible.

or

– understanding exactly what the problem is.

Some experts point out {and I generally agree} that unless you actually find out exactly what the problem was, what you did to fix it and via a test case demonstrate why the fix worked, you are not really solving the problem – You are just making it go away. You (hopefully!) know what you changed or the action you took so you have something that you can repeat which might fix it again next time. (NB this does not apply to the classic “turn it off, turn it back on again”, that nearly always is just an aversion therapy).

But it might not fix it next time.

Or you might be going to way more trouble to fix it than is needed {I’m remembering how flushing the shared pool used to get touted as a way to resolve performance issues, but it is a pretty brutal thing to do to a production database}.

You might even be making other problems worse {like slowing down everything on the production database as the caches warm up again, having flushed out all that data in the SGA, or that good-old-standard of rebuilding indexes that simply do not benefit from the rebuild}.

There is another issue with “just fixing it by trying things” in that you are not really learning about the cause of the issue or about how the technology you are looking after works. A big part of what makes an “expert” an expert is the drive, desire and opportunity to take the time to work this out. It’s that last part I sometimes get grumpy about, the opportunity.

For many of us, we do not have the luxury of investigating the root cause. Because we are under so much pressure to “get it fixed right now and worry about the detail later”. But we do not get to the detail as there is then the next “fix this problem right now” and so it goes on. {Kellyn Pot’Vin does a nice post about what she calls the Superman Conundrum on this topic}.

I’ve had exactly this dilema just a couple of months ago. Below are the details, it’s a bit long so skip to the end of the post if you like…

I was creating test data and I decided to use parallel processing to speed it up. I created a month’s worth of data with PL/SQL and then decided to copy it with a simple “insert into …select” statement, updating dates and a couple of other incrementing columns as part of the insert, using parallel. The creation of the second month’s data took longer than the PL/SQL code for the first month took. What the!!!??? I pulled up the AWR information and I could see that the problem was (possibly) with inter process communication between the parallel processes, as shown by the PX DEQ CREDIT:send blkd wait event.

The below screenshot shows the overall instance workload, the green is CPU and the Pink is “Other”. Only one SQL statement is responsible for all of this effort, via 5 sessions (four of which are parallel threads) You can see that the issue had been going on for over an hour {oh, and to a certain extent these pretty pictures are pointless – I am not looking for the exact solution now, but having loaded the pictures up to the blog site I am damn well going to pretty-up my blog with them}:

Drilling into that one session shows that the bulk of the waits by far is for PX DEq Credit: Send blkd:

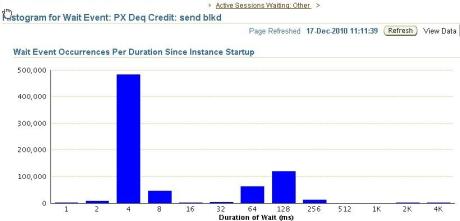

By selecting that wait event, I got the histogram of wait times since the system started {I love those little histograms of wait times}:

Note that these waits are for long periods of time, around 1/10 of a second on average and some are for up to 2 or 4 seconds.

The thing is, I had anticipated this problem and increased my PARALLEL_EXECUTION_MESSAGE_SIZE to 16K from the default of 2K already, as I knew from experience that the default is way to small and has slowed down parallel execution for me before. So why was I seeing poorer performane now than I anticipated? I’m not understanding stuff. So I needed to change one thing and see what impact it has and repeat until I got to the bottom of it.

Except I could not – the next team in line was waiting for this data and they already had massive pressure to deliver. My job, what my employer was giving me money to do, was to fix this problem and move on. So, in this instance I just pragmatically got on with getting the task done as quickly as I could.

I did what we all do {don’t we?} under times of accute time pressure. I made a handful of changes, using the knowledge I already have and guessing a little, hoping that one of them would do the trick. This included reducing the degree of parallelism, adding the /*+ append */ hint (I simply forgot the first time around), pre-allocating the required space to the tablespace, muttering “pleaseopleaseoplease” under my breath….

The job ran in less than 20 minutes and used less resource during that time. Well, it waited less anyway.

The wait histograms show lots and lots of shorter duration waits:

The duplication took 20 minutes when the previous attempt had been terminated after 2 hours when other factors forced it to be curtailed. Job done.

But the thing is, the problem was not fixed. I got the task done in a timescale that was required, I satisfied the need of the client, but I was and am not sure exactly why.

If I was a permanent employee I would consider pressing for being allowed to spend some time working this out, as my employer benefits from me extending my knowledge and skills. This is not always a successful strategy 🙂 {but it does indicate to me that my employer has a Problem and I need to address that}. In any case, as I was a consultant on this job, I was being paid to already know stuff. So it is now down to me, some personal time, the internet and people more knowledgeble than me who I can ask for help to sort this out.

And that was my main point. Often at work you have to get over the issue. Later on, you work out what the issue was. I wish I could get paid for the latter as well as the former. The real blow for me is that I no longer have access to that site and the information. My job was complete and, whether they have really shut down my access or not, I am not allowed to go back into the systems to dig around. I think I now know the issue, but I can’t prove it.

Hi,

Could it be the case that parallel dml is not enabled? You have to enable parallel dml at the session level, and it is not enabled by default.

Regards Jan

Hi Jan,

Thanks for that suggestion. You are actually right that I had not enabled parallel DML for that session {and it is a very good point to highlight} – but it was not one of the things I changed on that day (I changed it about 7 days later when Doug Burns reminded me about it ). And yes, I felt like a right idiot for forgetting it. However, in this case it was something else that made things better. But the point of this Friday Philosophy is not fixing the problem but the way one fixed it.

I might do a follow up on the actual technical lessons to learn from the whole process, but I did not salvage all the info from the site before I left, so it would be a little “made up” and not provable. Oddly, one of the lessons was how slow some PL/SQL functions can be…. 🙂

The problem here (as mentioned by Jan) is that the insert was not using PDML. As a result you saw “PX Deq Credit : send blkd”. What this tells you is that the producers (in this case the select side of the query) want to send their rows to the consumer (the QC here), but the QC can not accept them because he is too busy – so they must wait. By using the APPEND hint, you made the QC do less work (the logging, etc), so it did provide some relief, but it did not make the wait event go away, it was just less prevalent as you mentioned. Hope that helps with the root cause analysis.

Hi Greg,

Thanks for that and you are spot on. Yes, the fact that I am not using parallel DML in the example is correct (as I said in my reply to Jan) but it was not enabled in the faster run either. What sped up this process was a mixture of things but I had not specifically fixed the problem – I had put a band aid on it by speeding up the action of writing the data. But it got the job done for the client and allowed the downstream teams to keep working, so I had achieved the aim and had to immediately move on to the next crisis.

In fact, in this case enabling parallel DML did not make this task run as fast as you would think (and I would expect) Greg. As I said, I realised my fundamental error a few days later and did some tests over Christmas but did not see the speed up. I think I’m going to have to trawl through my scrappy notes and try to pull together a follow-up posting in the technical side of this but again it just tails off into an idea of what was wrong that was not fully proven as the work came to an end.

>I’m remembering how flushing the shared pool used to get touted as a way to resolve performance issues,

>but it is a pretty brutal thing to do to a production database

It gets flushed three times a day here… I can’t convince them to stop 🙂

I suppose my point is that once a process, no matter how flawed, gets embedded, the FUD to change that process can be near insurmountable – “it works”.

That’s why you need to get to the bottom of things and have the right solution to the known problem.

Although that’s not really relevant to your one-off issue above.

Hi Dom,

“Ouch!” Why not go the whole hog and just bounce the database every morning, lunchtime and evening. Hey, remember the days when unix boxes would have a controlled restarte every weekend or month to clear things up?

You are right of course, once a process is in place it is very hard to challenge that process. It’s a similar issue to that of “why identify the root cause of a ‘solved’ issues”. Because it is not solved, the situation is sub-optimal. But the business does not want optimal, it wants ‘good enough’. Actually, sometimes that is fine, but do it all the time and your whole solution/system/IT department is ‘good enough’. Way below ‘Great’.