When to Fix & When to Find Out – Friday Philosophy July 15, 2011

Posted by mwidlake in AWR, Friday Philosophy, Testing.Tags: knowledge, Testing

8 comments

{warning, this is one of my long, rambling Friday Philosophy blogs. Technical blogs are on the way – though there are some nice AWR screen shots in this one 🙂 }

As a DBA (or System Administrator or Network admin or any other role that involves fixing things that are broken before the shouting starts) there is often a tension between two contending “best practices”:

– getting the system working again as soon as possible.

or

– understanding exactly what the problem is.

Some experts point out {and I generally agree} that unless you actually find out exactly what the problem was, what you did to fix it and via a test case demonstrate why the fix worked, you are not really solving the problem – You are just making it go away. You (hopefully!) know what you changed or the action you took so you have something that you can repeat which might fix it again next time. (NB this does not apply to the classic “turn it off, turn it back on again”, that nearly always is just an aversion therapy).

But it might not fix it next time.

Or you might be going to way more trouble to fix it than is needed {I’m remembering how flushing the shared pool used to get touted as a way to resolve performance issues, but it is a pretty brutal thing to do to a production database}.

You might even be making other problems worse {like slowing down everything on the production database as the caches warm up again, having flushed out all that data in the SGA, or that good-old-standard of rebuilding indexes that simply do not benefit from the rebuild}.

There is another issue with “just fixing it by trying things” in that you are not really learning about the cause of the issue or about how the technology you are looking after works. A big part of what makes an “expert” an expert is the drive, desire and opportunity to take the time to work this out. It’s that last part I sometimes get grumpy about, the opportunity.

For many of us, we do not have the luxury of investigating the root cause. Because we are under so much pressure to “get it fixed right now and worry about the detail later”. But we do not get to the detail as there is then the next “fix this problem right now” and so it goes on. {Kellyn Pot’Vin does a nice post about what she calls the Superman Conundrum on this topic}.

I’ve had exactly this dilema just a couple of months ago. Below are the details, it’s a bit long so skip to the end of the post if you like…

I was creating test data and I decided to use parallel processing to speed it up. I created a month’s worth of data with PL/SQL and then decided to copy it with a simple “insert into …select” statement, updating dates and a couple of other incrementing columns as part of the insert, using parallel. The creation of the second month’s data took longer than the PL/SQL code for the first month took. What the!!!??? I pulled up the AWR information and I could see that the problem was (possibly) with inter process communication between the parallel processes, as shown by the PX DEQ CREDIT:send blkd wait event.

The below screenshot shows the overall instance workload, the green is CPU and the Pink is “Other”. Only one SQL statement is responsible for all of this effort, via 5 sessions (four of which are parallel threads) You can see that the issue had been going on for over an hour {oh, and to a certain extent these pretty pictures are pointless – I am not looking for the exact solution now, but having loaded the pictures up to the blog site I am damn well going to pretty-up my blog with them}:

Drilling into that one session shows that the bulk of the waits by far is for PX DEq Credit: Send blkd:

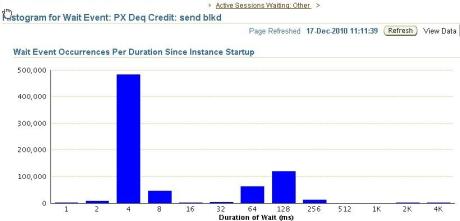

By selecting that wait event, I got the histogram of wait times since the system started {I love those little histograms of wait times}:

Note that these waits are for long periods of time, around 1/10 of a second on average and some are for up to 2 or 4 seconds.

The thing is, I had anticipated this problem and increased my PARALLEL_EXECUTION_MESSAGE_SIZE to 16K from the default of 2K already, as I knew from experience that the default is way to small and has slowed down parallel execution for me before. So why was I seeing poorer performane now than I anticipated? I’m not understanding stuff. So I needed to change one thing and see what impact it has and repeat until I got to the bottom of it.

Except I could not – the next team in line was waiting for this data and they already had massive pressure to deliver. My job, what my employer was giving me money to do, was to fix this problem and move on. So, in this instance I just pragmatically got on with getting the task done as quickly as I could.

I did what we all do {don’t we?} under times of accute time pressure. I made a handful of changes, using the knowledge I already have and guessing a little, hoping that one of them would do the trick. This included reducing the degree of parallelism, adding the /*+ append */ hint (I simply forgot the first time around), pre-allocating the required space to the tablespace, muttering “pleaseopleaseoplease” under my breath….

The job ran in less than 20 minutes and used less resource during that time. Well, it waited less anyway.

The wait histograms show lots and lots of shorter duration waits:

The duplication took 20 minutes when the previous attempt had been terminated after 2 hours when other factors forced it to be curtailed. Job done.

But the thing is, the problem was not fixed. I got the task done in a timescale that was required, I satisfied the need of the client, but I was and am not sure exactly why.

If I was a permanent employee I would consider pressing for being allowed to spend some time working this out, as my employer benefits from me extending my knowledge and skills. This is not always a successful strategy 🙂 {but it does indicate to me that my employer has a Problem and I need to address that}. In any case, as I was a consultant on this job, I was being paid to already know stuff. So it is now down to me, some personal time, the internet and people more knowledgeble than me who I can ask for help to sort this out.

And that was my main point. Often at work you have to get over the issue. Later on, you work out what the issue was. I wish I could get paid for the latter as well as the former. The real blow for me is that I no longer have access to that site and the information. My job was complete and, whether they have really shut down my access or not, I am not allowed to go back into the systems to dig around. I think I now know the issue, but I can’t prove it.

Why is my SYSAUX Tablespace so Big? Statistics_level=ALL June 2, 2011

Posted by mwidlake in AWR, performance.Tags: AWR, data dictionary, performance

14 comments

One of my most popular postings is about why your SYSTEM tablespace could be rather large. Recently I’ve had issues with a SYSAUX tablespace being considerably larger than I expected, so I thought I would do a sister posting on the reason.

The client I was working with at the time was about to go live with a new application and database. For various reasons I was a little anxious about how the Java application (the User Interface) would actually call the stored PL/SQL code I had helped develop. Initial workloads would be low and so I asked that the STATISTICS_LEVEL be set to ALL, so that bind variables (amongst other things) would be gathered. This is on version 10.2.0.4, btw, enterprise edition and 4-node RAC.

We went live, issues were encountered and resolved, the usual way these things work. Then, a few weeks in and when everything was still very “exciting” from a problem resolution perspective, I got an odd email from the DBA team. Would they like us to add another datafile to the SYSAUX tablespace. Huh? I checked. I’d been watching the size of our application’s tablespaces but not the others {well, I was not supposed to be a DBA and I was watching an awful lot of other things}. Our SYSAUX tablespace was around 160GB in size, having pretty much filled it’s 5th datafile. Why? I checked to see what was taking up the space in the tablespace:

select * from

(select owner,segment_name||'~'||partition_name segment_name,bytes/(1024*1024) size_m

from dba_segments

where tablespace_name = 'SYSAUX'

ORDER BY BLOCKS desc)

where rownum < 40

OWNER SEGMENT_NAME SIZE_M

------------------ -------------------------------------------------- ------------

SYS WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_3911 27,648

SYS WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_3911 26,491

SYS WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_3537 23,798

SYS WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_3537 22,122

SYS WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_4296 17,378

SYS WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_4296 16,818

SYS WRH$_ACTIVE_SESSION_HISTORY~WRH$_ACTIVE_14459270_3 136

911

SYS WRH$_SQLSTAT~WRH$_SQLSTA_14459270_3911 96

SYS WRH$_SQLSTAT~WRH$_SQLSTA_14459270_3537 72

SYS WRH$_SQLSTAT~WRH$_SQLSTA_14459270_4296 47

SYS WRH$_LATCH_MISSES_SUMMARY_PK~WRH$_LATCH__14459270_ 45

3537

SYS I_WRI$_OPTSTAT_H_OBJ#_ICOL#_ST~ 41

SYS WRH$_SYSMETRIC_SUMMARY~ 40

SYS WRH$_LATCH_MISSES_SUMMARY_PK~WRH$_LATCH__14459270_ 37

As you can see, almost all the space is being taken up by WRH$_LATCH_CHILDREN and WRH$_LATCH_CHILDREN_PK partitions. They are massive compared to other objects. A quick goggle did not come up with much other than many hits just listing what is in SYSAUX and the odd person also seeing SYSAUX being filled up with these objects and suggested ways to clear down space, nothing about the cause.

I had a chat with the DBAs and we quickly decided that this was going to be something to do with AWR given the name of objects – “WRH$_” objects are the things underlying AWR. The DBA suggested my settings of 15 minute intervals and 35 day retention was too aggressive. I knew this was not the case, I’ve had more aggressive snapshot intervals and longer retention periods on far busier systems than this. I did not have access to Metalink at that point so I asked the DBAs to raise a ticket, which they duly did.

Oracle support cogitated for a couple of days and came back with the advice to reduce the retention period. Hmmmm. Via the DBA I asked Oracle support to explain why those objects were so large when I had not seen this issue on several other systems. Was it a bug? I had by now corroborated with a friend from a previous site with 5 minute snapshot intervals and two months retention period and their SYSAUX tablespace was about 10GB all in. I did not want to go changing things if we did not know it would fix the issue as we really wanted to stop the growth of SYSAUX as soon as possible, not just try a hunch.

As you probably realise from the title of this blog, the issue was not the snapshot interval or retention period but the STATISTICS_LEVEL=ALL. The one and only hit you get in metalink if you search on WRH$_LATCH_CHILDREN is note 874518.1. From V10.1.0.2 to V11.1.0.7 setting this parameter to ALL is known to create a lot of data about Latch children and not clear it down when the AWR data is purged (Bug 8289729). The advice was to change STATISTICS_LEVEL and make the snapshot interval larger. I’d suggest you just need to alter the STATISTICS_LEVEL, unless you really, really need that extra information gathered. It seemed to take Oracle Support an extra day or two to find that note for us. {I’ve since checked out Metalink directly to confirm all this}.

So with a known issue we felt confident that altering the initialisation parameter would solve the issue. It took a while for us to change the STATISTICS_LEVEL on the production system – Change Control for that site is rather robust. This allowed us to see some other impacts of this issue.

The mmon process which looks after AWR data was becoming a top session in our OEM performance screens. In particular, a statement with SQL id 2prbzh4qfms7u that inserted into the WRH$_LATCH_CHILDREN table was taking several seconds to run each time and was running quite often {I include the SQL ID as it may be the same on many oracle V10 systems as it is internal code}:

This was doing a lot of IO, by far the majority of the IO on our system at the time – it was a new system and we had been able to tune out a lot of the physical IO.

The physical IO requirements and 15-20 second elapsed time made this out most demanding statement on the system

We also now started to have issues with mmon running out of undo space when it ran at the same time as our daily load. This was particularly unfortunate as it coincided in a period of “intense management interest” in the daily load…

What was happening to the size of the SYSAUX tablespace?

Enter the tablespace (or leave null)> sys

TS_NAME ORD SUM_BLKS SUM_K MAX_CHNK_K NUM_CHNK

-------------------- ----- ----------- ------------ ----------- --------

SYSAUX alloc 58,187,904 465,503,232 33,553,408 14

free 10,728 85,824 21,504 20

SYSTEM alloc 128,000 1,024,000 1,024,000 1

free 68,360 546,880 546,752 3

4 rows selected.

select * from

(select owner,segment_name||'~'||partition_name segment_name,bytes/(1024*1024) size_m

from dba_segments

where tablespace_name = 'SYSAUX'

ORDER BY BLOCKS desc)

where rownum < 40

OWNER SEGMENT_NAME SIZE_M

-------- ------------------------------------------------------------ ----------

SYS WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_6201 30262

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_5817 29948

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_5435 28597

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_4675 28198

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_3911 27648

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_5817 27144

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_6585 26965

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_6201 26832

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_4675 26741

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_3911 26491

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_4296 26307

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_5435 26248

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_4296 25430

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_6585 25064

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_5058 24611

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_5058 23161

WRH$_LATCH_CHILDREN~WRH$_LATCH__14459270_6966 9209

WRH$_LATCH_CHILDREN_PK~WRH$_LATCH__14459270_6966 8462

WRH$_SYSMETRIC_SUMMARY~ 152

WRH$_ACTIVE_SESSION_HISTORY~WRH$_ACTIVE_14459270_3911 136

WRH$_SQLSTAT~WRH$_SQLSTA_14459270_3911 96

@sysaux_conts

OWNER OBJ_PART_NAME SIZE_M

------------------------------ ---------------------------------------- ----------

SYS WRH$_LATCH_CHILDREN-WRH 231745.063

SYS WRH$_LATCH_CHILDREN_PK-WRH 215573.063

SYS WRH$_SQLSTAT-WRH 711.0625

SYS WRH$_LATCH_MISSES_SUMMARY_PK-WRH 439.0625

SYS WRH$_ACTIVE_SESSION_HISTORY-WRH 437.0625

SYS WRH$_LATCH_PARENT-WRH 292.0625

SYS WRH$_LATCH-WRH 276.0625

SYS WRH$_LATCH_MISSES_SUMMARY-WRH 273.0625

SYS WRH$_SEG_STAT-WRH 268.0625

SYS WRH$_LATCH_PARENT_PK-WRH 239.0625

SYS WRH$_SYSSTAT_PK-WRH 237.0625

Yes, that is close to half a terabyte of SYSAUX and it is all used, more partitions have appeared and the total size of the largest segments in SYSAUX show how WRH$_LATCH_CHILDREN and WRH$_LATCH_CHILDREN_PK make up the vast majority of the space used.

Shortly after, we finally got permission to change the live system. The impact was immediate, mmon dropped from being the most demanding session, that SQL code dropped down the rankings and the issues with running out of undo ceased.

I was anxious to see if the old data got purged, as the Metalink note had suggested the data would not be purged. Thankfully, that was not the case. The space was slowly released as normal purging of data outside the retention period took place and after just over a month, the SYSAUX tablespace contained a lot less information and was mostly free space:

OWNER OBJ_PART_NAME SIZE_M

---------- ---------------------------------------- ----------

SYS WRH$_LATCH_MISSES_SUMMARY_PK-WRH 512.0625

SYS WRH$_LATCH_MISSES_SUMMARY-WRH 350.0625

SYS WRH$_LATCH-WRH 304.0625

SYS WRH$_SQLSTAT-WRH 280.0625

SYS WRH$_LATCH_PK-WRH 259.0625

SYS WRH$_SYSSTAT_PK-WRH 247.0625

SYS WRH$_SERVICE_STAT_PK-WRH 228.0625

SYS WRH$_PARAMETER_PK-WRH 201.0625

SYS WRH$_PARAMETER-WRH 169.0625

SYS WRH$_SYSSTAT-WRH 169.0625

SYS WRH$_SEG_STAT-WRH 161.0625

SYS WRH$_SYSTEM_EVENT_PK-WRH 156.0625

SYS WRH$_SYSMETRIC_SUMMARY- 152

SYS WRH$_SYSTEM_EVENT-WRH 133.0625

SYS WRH$_SERVICE_STAT-WRH 123.0625

SYS WRH$_ACTIVE_SESSION_HISTORY-WRH 115.0625

TS_NAME ORD SUM_BLKS SUM_K MAX_CHNK_K NUM_CHNK

-------------------- ----- ----------- ------------ ----------- --------

SYSAUX alloc 58,251,904 466,015,232 33,553,408 15

free 57,479,400 459,835,200 4,063,232 1,208

SYSTEM alloc 128,000 1,024,000 1,024,000 1

free 68,048 544,384 544,320 2

Now, how do we get that space back? I left that with the DBA team to resolve.

Oh, one last thing. I mentioned the above to a couple of the Oaktable lot in the pub a few weeks back. Their instant response was to say “You set STATISTICS_LEVEL to ALL on a live system?!? You are mad!”

🙂

{Update, I’ve just spotted this posting by Colbran which is related. Last time I googled this I just got a stub with no information}

I am Neo off the Matrix (apparently) March 30, 2011

Posted by mwidlake in AWR, performance.Tags: AWR, perception, performance

8 comments

I know I have mentioned it before, but I am a big fan of the OEM performance screens that are derived from the ASH/AWR information. One of the things I really like about it is the immediate information it gives you, in one glance, that things are “not normal”. Once you notice that things are not normal you can then, within a few seconds, get a feel for what is still probably OK and where you have something that has changed.

As an example of the immediate information, I recently came back to my desk and glanced at my OEM performance screen. It was showing the below:

“data load has just ran” I said to my comrade-in-arms. “which one?” he asked. “The Delta – It ran the quick plan. But it started a bit late, 12:15. Oh, and looks like the transaction view code has swapped back to the full table scan plan and the summary code is not playing up at the moment.”

“you’re turning into Neo you are – can you see a lady in a red dress???” he asked.

That was of course a reference to the “Matrix” films where at times you see the virtual world displayed on a screen as a stream of characters running down the screen – but once you get used to it you can apparently “see” what is going.

The screen shot above is not even actually a very good example of what the performance screens can show you. One of my minor complaints about the performance screens is that it scales to show the greatest of the largest peak or a number of sessions to match the number of CPUs (real or fake) that are available to you. So if you have more CPU available than you need, you can’t see much detail in the graph. And if you have had a nasty peak of activity, again, all detail is squeezed out. In my case, the box is sized to cope in 12 months and the system is new, so activity is scuttling along the bottom of the graph.

However, “poor” though the example is, it told me what was going on across my system at a glance, something about the major tasks we are running, that one problem is currently occurring and that several of the other issues I need to keep an eye out for are not occurring.

That is why I love these screens – I recognise “my” activity patterns from the graph, I now recognise the SQL IDs for my key statements. If I see a pattern in the graph I don’t recognise, I need to check things out immediately. Three or four times over the last 2 weeks I have spotted an issues, started investigating and found out the cause before the Operations desk has even noticed an issue.

Oh, and what is SQL type 189? It is a merge statement. Our implementation of OEM is a little old, it does not correctly interpret that SQL command type. It might be a little old, it is still a lot useful.

Missing SQL in AWR/ASH February 23, 2010

Posted by mwidlake in AWR, performance.Tags: AWR, bug, data dictionary, performance

3 comments

I’ve been working on some demonstrations of AWR/ASH within OEM for a client and I’ve come across a situation where you can be missing the SQL (and thus a lot of the useful information) for code run via DBMS_JOB or DBMS_SCHEDULER.

This is due to bugs 5928612.8 for DBMS_JOB and 5140631.8 for DBMS_SCHEDULER. If you can’t access metalink, then check out the end of this webpage. The SQL_ID/SQL_HASH_VALUE is not populated in V$SESSION for those jobs running under DBMS_JOB or DBMS_SCHEDULER. I am presuming from the fact that V$SESSION is not updated with these values, it is not available to V$ACTIVE_SESSION_HISTORY.

I did check that the SQL_ID was null in V$ACTIVE_SESSION_HISTORY for the records that connected to the USER_ID I was using, except for the handful of entries for when I was testing my code manually before running it via DBMS_JOB. The SQL_ID was captured fine for these interactive sessions (and the code executed was identical).

The bugs appear in 10.1.0.2 and both are fixed in 10.2.0.4 and 11. Both have patches available.

I am using 10.2.0.3

As soon as I mentioned my woes about not being able to see the SQL for code I was running via DBMS_JOB I knew of the existence of the bugs, as colleagues mentioned being aware of them as the patches have been applied to a couple of the other systems. However, I could find no trace of them on Metalink or Google.These bugs are listed as against v$session, not AWR or ASH, which is why I could not find them via searches for “missing sql awr” or missing sql ash” etc. So hopefully this posting will allow the connection to be made.

I find it very vexing when you know there is a fix for an oracle problem but you can’t find it in metalink (which seems even more true with the new, all-singing all-dancing all-fancy-graphics little help metalink). Sometimes it is because the bug number is not being made publically available and in other cases, such as this one, it is because no one has made the connection between the initial problem and other things it could impact. I guess it is asking a lot of Oracle Corp to do this for us and it would be unreasonable of us to hold it against them – But they are supposed to be a blooming data, information and knowledge expertise company! Tsch.

As an example of what I see in AWR with my workload, generated via DBMS_JOB {and I’m sorry it is so small, you should be able to click on it and get a full-size version}:

Notice that the top five jobs are via user MDW_2. I know that none of the top SQL on the left is from my workload (you will have to just take my work, but it is, in fact, all the “OEM/AWR code 🙂 ). Also, the top code shown is heave on green for CPU, but you can see most of the workload in the Top Activity and the Top Sessions is blue, for User IO.

If I pick out the top session, 545, and click on it I get the below screen:

Note the entry for SQL_ID is blank and SQL Command is “UNKNOWN”. I can see what it is doing (db_file_scattered read and some CPU) but not what on or why. 😦